FastX 3.3 Clustering Quick Reference

This document is a quick cheat sheet for setting up a cluster for FastX 3.3 and later.

Restarting services

When you change a configuration, restart the affected service:

FastX compute node: sudo service fastx3 restart

FastX cluster manager: sudo service fastx-cluster restart

FastX NATS Server: sudo service fastx-nats restart

MongoDB: sudo service mongod restart
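When several components change at once, the restart commands above can be wrapped in a small helper. The following is only a sketch: the function name `restart_fastx_services` and the `DRY_RUN` variable are hypothetical and not part of FastX. With `DRY_RUN` left at its default of 1, the helper only prints the commands it would run.

```shell
#!/bin/sh
# Hypothetical helper (not shipped with FastX): restart the named services
# in the order given. DRY_RUN defaults to 1, so commands are printed
# instead of executed; set DRY_RUN=0 to actually restart.
DRY_RUN="${DRY_RUN:-1}"

restart_fastx_services() {
    for svc in "$@"; do
        if [ "$DRY_RUN" = "1" ]; then
            echo "sudo service $svc restart"
        else
            sudo service "$svc" restart || return 1
        fi
    done
}

# On a cluster manager: database, then NATS, then the cluster manager.
restart_fastx_services mongod fastx-nats fastx-cluster
# On a compute node:
restart_fastx_services fastx3
```

The service names are the ones listed above; only the wrapper itself is an assumption.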

Setting up a single cluster manager

The cluster manager is the heart of the cluster. The cluster manager connects the database which holds the clustering information with the compute nodes which run the sessions.

The following steps set up a single cluster manager. For fault tolerant and high availability cluster managers, see the section "Fault tolerant and high availability cluster managers" below.

On the cluster manager, run the following:

curl https://www.starnet.com/files/private/FastX3/setup-fastx-cluster.sh | sudo bash -

Copy /etc/fastx/broker.json from the cluster manager to each of your compute nodes

chown fastx.fastx /etc/fastx/broker.json

Uploading the configuration from a compute node to the cluster

A default FastX installation starts in standalone mode where all configuration is located on the FastX server. You can migrate the configuration from the standalone to the cluster.

- This only needs to be done on ONE of the compute nodes

- Make sure the cluster manager is running

- Copy the /etc/fastx/broker.json from the cluster manager to the compute node

- Run the following command to upload the standalone configuration to the cluster manager

/usr/lib/fastx/3/nodejs/bin/node /usr/lib/fastx/3/microservices/tools/upload-store.js config --bjf /etc/fastx/broker.json --dir /var/fastx/local/store

Configure load balancing

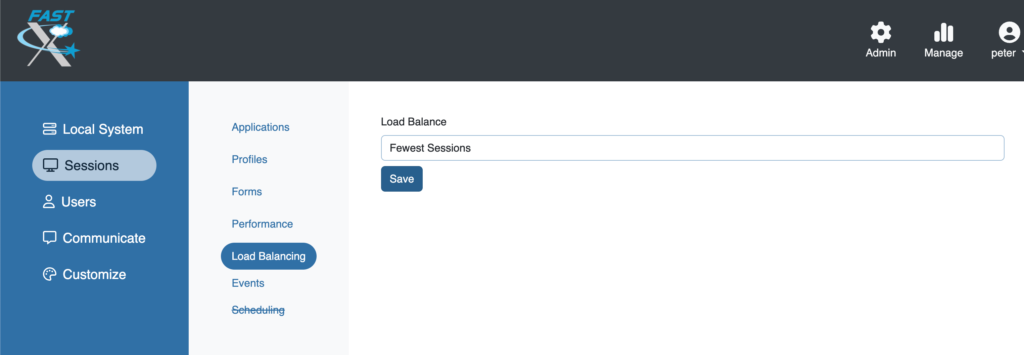

Load balancing allows you to start sessions on multiple machines and evenly distribute the sessions across the cluster. See Custom load balancing for advanced load balancing types.

- Log in as an Admin

- Click on the Admin Button

- Sessions > Load Balancing > Fewest Sessions > Save

Fault tolerant and high availability cluster managers

By default, FastX uses a single cluster manager with both the cluster manager and database stored on the same system. This creates a single point of failure: if the cluster manager is unavailable, so is the cluster. You can add high availability and fault tolerance to the cluster by doing the following:

Create a set of high availability cluster managers

Cluster managers hold the transport configuration which connects the database to the compute nodes.

Set up multiple cluster managers

Define your set of cluster managers

In our example we have N systems designated as cluster managers:

cluster1.example.com

cluster2.example.com

cluster3.example.com

...

clusterN.example.com

Install the base package on each system

On each cluster manager (we recommend a minimum of 3) run the following command.

curl https://www.starnet.com/files/private/FastX3/setup-fastx-cluster.sh | sudo bash -

Add the MongoDB database to the replica set on each system

FastX uses a MongoDB database for storage. Set up a replica set on multiple systems to enable fault tolerance on your database.

MongoDB holds the configuration and the state of the cluster. Without this component, the cluster loses all information. In a High Availability environment, it is important to configure MongoDB to work as a Replica Set. Please refer to the MongoDB documentation for a full discussion.

Edit the /etc/mongod.conf file to be set up as a replica set and to listen on all addresses:

replication:
  replSetName: "rs0"
net:
  bindIp: 0.0.0.0

Restart mongod: sudo service mongod restart
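A quick check of the edited file can catch a missed setting before or after the restart. The sketch below assumes the replica set name `rs0` and the `0.0.0.0` bind address used in this document; `check_mongod_conf` is a hypothetical helper that uses simple pattern matching rather than a real YAML parser, so it does not verify that each key sits under the correct parent section.

```shell
#!/bin/sh
# Hypothetical check: confirm that a mongod.conf contains an indented
# replSetName of rs0 and an indented bindIp of 0.0.0.0.
# This is plain text matching, not YAML parsing.
check_mongod_conf() {
    conf="$1"
    rc=0
    if grep -Eq '^[[:space:]]+replSetName:[[:space:]]*"?rs0"?[[:space:]]*$' "$conf"; then
        echo "ok: replSetName is rs0"
    else
        echo "missing: replication.replSetName rs0"
        rc=1
    fi
    if grep -Eq '^[[:space:]]+bindIp:[[:space:]]*0\.0\.0\.0[[:space:]]*$' "$conf"; then
        echo "ok: bindIp is 0.0.0.0"
    else
        echo "missing: net.bindIp 0.0.0.0"
        rc=1
    fi
    return "$rc"
}

# Example usage against the default path, only if the file is readable.
if [ -r /etc/mongod.conf ]; then
    check_mongod_conf /etc/mongod.conf || echo "review /etc/mongod.conf"
fi
```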

Only on cluster1.example.com

Initialize the MongoDB replica set

Open a terminal and run: mongosh

Initiate the replica set

rs.initiate( {
  _id : "rs0",
  members: [
    { _id: 0, host: "cluster1.example.com:27017" },
    { _id: 1, host: "cluster2.example.com:27017" },
    { _id: 2, host: "cluster3.example.com:27017" }
  ]
})

For more information on MongoDB replica sets and advanced communication including TLS security, see the following articles:

- Basic Tutorial on How to Deploy a Replica Set: https://docs.mongodb.com/manual/tutorial/deploy-replica-set/

- Documentation index on MongoDB replication: https://docs.mongodb.com/manual/administration/replica-set-deployment/

- Url String for a Replica Set: https://docs.mongodb.com/manual/reference/connection-string/

- Set up MongoDB Security: https://docs.mongodb.com/manual/security/
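For clusters with more than three managers, the rs.initiate call can be generated from a host list instead of typed by hand. This is a minimal sketch: `build_rs_initiate` is a hypothetical helper, and it assumes every member listens on the default port 27017 as in the example above.

```shell
#!/bin/sh
# Hypothetical helper: print an rs.initiate() call for the given replica
# set name and hosts. Member _id values are assigned 0, 1, 2, ... in
# argument order, and port 27017 is assumed for every host.
build_rs_initiate() {
    rs_name="$1"
    shift
    printf 'rs.initiate({ _id: "%s", members: [' "$rs_name"
    i=0
    for host in "$@"; do
        [ "$i" -gt 0 ] && printf ','
        printf ' { _id: %d, host: "%s:27017" }' "$i" "$host"
        i=$((i + 1))
    done
    printf ' ] })\n'
}

build_rs_initiate rs0 cluster1.example.com cluster2.example.com cluster3.example.com
```

The printed text can be pasted into mongosh on cluster1.example.com, or passed to it with `mongosh --eval`.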

Edit /etc/fastx/db.json

Edit /etc/fastx/db.json on each cluster manager to point to the MongoDB replica set, then restart the FastX cluster manager service.

Note that the url lists all three MongoDB replica set members. For more information see https://docs.mongodb.com/manual/reference/connection-string/

{
  "url": "mongodb://cluster1.example.com:27017,cluster2.example.com:27017,cluster3.example.com:27017/fastx",
  "options": {
    "useUnifiedTopology": true,
    "replicaSet": "rs0",
    "readPreference": "primaryPreferred"
  }
}

Edit /etc/fastx/nats-server.conf

Edit /etc/fastx/nats-server.conf on each cluster manager to configure the transporters to talk to each other as a cluster, then restart the FastX NATS service.

- Each cluster manager should be specified in the routes portion of the cluster section

# Client port of 4222 on all interfaces
port: 4222

authorization {
  token: thisisasecret
}

tls {
  cert_file: /etc/pki/tls/certs/fedora-self.nats.crt
  key_file: /etc/pki/tls/private/fedora-self-key.nats.txt
}

# This is for clustering multiple servers together.
cluster {
  # Route connections to be received on any interface on port 6222
  port: 6222

  # Routes are protected, so need to use them with --routes flag
  authorization {
    user: ruser
    password: T0pS3cr3t
    timeout: 2
  }

  # Routes are actively solicited and connected to from this server.
  routes = [
    nats-route://10.211.55.9:6222,
    nats-route://10.211.55.4:6222,
    nats-route://10.211.55.8:6222
  ]
}

Edit /etc/fastx/broker.json

Edit /etc/fastx/broker.json to configure the broker to connect to the fault tolerant cluster, then restart the FastX cluster manager service.

- Note that each cluster manager is specified in the “servers” section

- Note that the port is 4222 which is the client port

- Note that the token is the same as the authorization token in /etc/fastx/nats-server.conf

{
  "transporterType": "nats",
  "namespace": "fastx-cluster",
  "rejectUnauthorized": false,
  "options": {
    "servers": [
      "nats://10.211.55.4:4222",
      "nats://10.211.55.9:4222",
      "nats://10.211.55.8:4222"
    ],
    "token": "thisisasecret"
  }
}

On cluster2…clusterN.example.com

Copy the files

Copy the following files from cluster1.example.com to the other cluster managers

/etc/fastx/broker.json

/etc/fastx/db.json

/etc/fastx/nats-server.conf

Restart the services

sudo service mongod restart

sudo service fastx-nats restart

sudo service fastx-cluster restart
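Because the broker token must equal the NATS authorization token, a quick comparison on each cluster manager can catch a copy mistake. The helpers below are hypothetical and use simple text matching with sed rather than real JSON or NATS config parsing; they assume one token entry per file, laid out as in the examples in this document.

```shell
#!/bin/sh
# Hypothetical helpers: extract the client token from each file.
# Text matching only; assumes one "token" entry per file.
broker_token() {
    sed -n 's/.*"token"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p' "$1" | head -n 1
}

nats_token() {
    # Strip optional quotes so token: "abc" and token: abc compare equal.
    sed -n 's/^[[:space:]]*token:[[:space:]]*\([^[:space:]]*\).*/\1/p' "$1" \
        | tr -d "\"'" | head -n 1
}

# Succeeds only if the broker token is non-empty and equals the NATS token.
tokens_match() {
    b=$(broker_token "$1")
    n=$(nats_token "$2")
    [ -n "$b" ] && [ "$b" = "$n" ]
}

# Example usage, only if both files are readable on this system.
if [ -r /etc/fastx/broker.json ] && [ -r /etc/fastx/nats-server.conf ]; then
    if tokens_match /etc/fastx/broker.json /etc/fastx/nats-server.conf; then
        echo "tokens match"
    else
        echo "token mismatch between broker.json and nats-server.conf"
    fi
fi
```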

On the FastX Compute Nodes

Copy the new /etc/fastx/broker.json from cluster1.example.com to each of the compute nodes and restart the FastX service on each node (sudo service fastx3 restart).
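With many compute nodes, the copy and restart can be scripted. The sketch below makes several assumptions that are not from this document: SSH access to each node with sudo rights, the hypothetical hostnames node1.example.com and node2.example.com, and /tmp as a staging location. `DRY_RUN` defaults to 1, so the commands are only printed (without their original quoting).

```shell
#!/bin/sh
# Hypothetical helper: push broker.json to each compute node, set its
# ownership to the fastx user, and restart the FastX service.
# DRY_RUN defaults to 1: commands are printed, not executed.
DRY_RUN="${DRY_RUN:-1}"

run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

push_broker_config() {
    for node in "$@"; do
        run scp /etc/fastx/broker.json "$node:/tmp/broker.json" || return 1
        run ssh "$node" "sudo cp /tmp/broker.json /etc/fastx/broker.json && sudo chown fastx:fastx /etc/fastx/broker.json" || return 1
        run ssh "$node" "sudo service fastx3 restart" || return 1
    done
}

# Hostnames are placeholders for your own compute nodes.
push_broker_config node1.example.com node2.example.com
```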

Configuring FastX for Multiple Systems

FastX uses a distributed database and local files to save configuration. The database allows for automatic synchronization for configuration across a cluster of servers. If you are configuring standalone servers you may want to automatically distribute configuration when setting up the server. The following steps will guide you through this process.

Set up a License Server

- On a system central to all servers, install the License Server

- On each session server, run /usr/lib/fastx/3/install.sh

- When asked if there is a license server on the network, select yes

- Add the hostname to point to the license server

Configure the First Server

- Install FastX

- Run the post installation script /usr/lib/fastx/3/install.sh

- Log in to the Browser Client as a FastX Administrator

- Configure the FastX Server According to your needs

Export the FastX Configuration

Local configuration is located in the /etc/fastx directory. Any configuration that is local to this system only (not to the entire cluster) is located in this directory. It is best practice to make a backup copy of your default config directory. Copy the default configuration onto each server so that the individual servers can change it later.

Clusters

Configuration global to your cluster is located in the database. If you are using clustering, this information is automatically synced across the cluster.

Standalone Servers

If you are not using clustering, or want to make a backup, the database is stored in files located at /var/fastx/local/store. Copy these files to the same location on other systems to set them up.
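One way to move or back up the store directory is as a single archive. This is a minimal sketch using tar; `backup_store` and `restore_store` are hypothetical helper names, and the archive path in the usage comment is only an example.

```shell
#!/bin/sh
# Hypothetical helper: archive a store directory into a gzip tarball.
# The archive contains the directory itself (e.g. "store/...").
backup_store() {
    src="$1"    # e.g. /var/fastx/local/store
    out="$2"    # destination .tar.gz path
    tar -czf "$out" -C "$(dirname "$src")" "$(basename "$src")"
}

# Hypothetical helper: unpack the archive under the given parent directory,
# so that "store" ends up at <parent>/store.
restore_store() {
    archive="$1"
    parent="$2"  # e.g. /var/fastx/local
    mkdir -p "$parent" && tar -xzf "$archive" -C "$parent"
}

# Example usage (run with sufficient privileges):
#   backup_store /var/fastx/local/store /tmp/fastx-store.tar.gz
#   restore_store /tmp/fastx-store.tar.gz /var/fastx/local
```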

Configuring Other Servers

- Install FastX

- Run the post installation script in quiet mode: /usr/lib/fastx/3/install.sh -q

- Copy the default local configuration from the first server into /etc/fastx

- Copy the /var/fastx/local/store directory to the new system

- Copy the license.lic file into /var/fastx/license

- Restart the FastX service
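The steps above can be laid out as a command plan for each additional server. The sketch below only prints the commands and executes nothing. It assumes FastX is already installed on the new server, that the first server is reachable over SSH, that rsync is available, and that the first server keeps its configuration and license in the same paths used in this document; `plan_new_server` and the hostname are hypothetical.

```shell
#!/bin/sh
# Hypothetical sketch: print the commands for configuring an additional
# server from an already configured first server. Nothing is executed.
plan_new_server() {
    first="$1"   # hostname of the configured first server (assumption)
    cat <<EOF
sudo /usr/lib/fastx/3/install.sh -q
sudo rsync -a $first:/etc/fastx/ /etc/fastx/
sudo rsync -a $first:/var/fastx/local/store/ /var/fastx/local/store/
sudo rsync -a $first:/var/fastx/license/license.lic /var/fastx/license/
sudo service fastx3 restart
EOF
}

plan_new_server first.example.com
```

Review the printed plan before running any of it; copying /etc/fastx wholesale assumes the first server still holds the default local configuration.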